Performance vs Privacy: AI & Your Data

Each of us involved in workplace transformation and the implementation of AI solutions has a responsibility to work with an awareness of the sort of world we want to create. To protect privacy and humanity in the workplace, we must approach AI with open eyes. Because the machines are watching us…

- Amazon has patented a wristband that tracks the hand movements of warehouse workers and uses vibrations to nudge them into being more efficient.

- Workday predicts which employees will quit, using about 60 factors.

- Humanyze sells smart ID badges that track employees around the office and reveal how well they interact with their colleagues.

- Slack enables managers to see how quickly employees complete tasks. Note the clue in the name: SLACK stands for Searchable Log of All Conversations and Knowledge.

- Veriato tracks employees’ keystrokes on their computers to gauge how committed they are to their company.

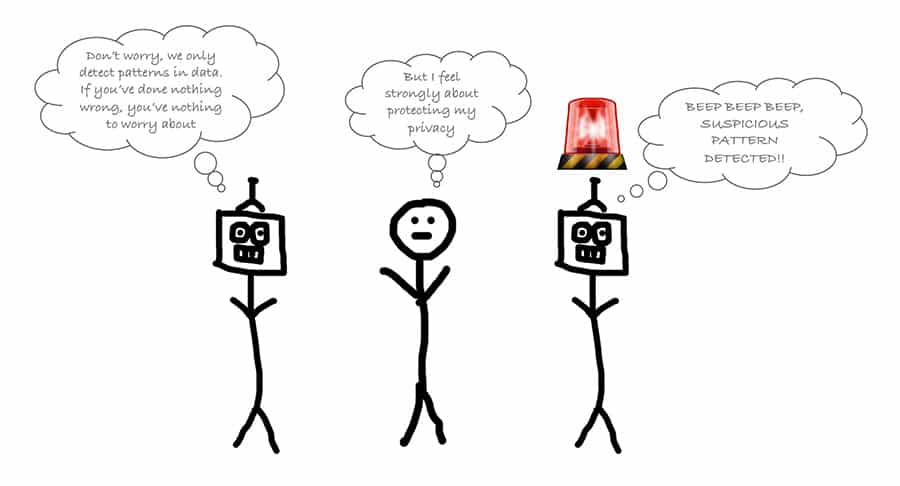

Which of the above examples is a sign of progress and which smacks of control freakery? What level of surveillance seems reasonable to you and at what point have we stepped over the line of Orwellian creepiness?

While most of us would agree that using AI-powered computer vision to check if employees are wearing safety gear is a good thing, how do you feel about AI algorithms scanning for anomalies in expense claims, for example by flagging receipts from odd hours of the night?

Also a good thing, seeing as you shouldn’t have anything to hide? What about mood tracking devices reporting to HR on your interaction style? Too Big Brother?

Back in 1999 the CEO of Sun Microsystems, Scott McNealy, famously told a group of reporters that consumer privacy issues are a ‘red herring.’ “You have zero privacy anyway,” he said. “Get over it.”

Yet regulations like the General Data Protection Regulation (GDPR), which governs how organizations collect and use the personal data of people in the EU, including for marketing calls, emails and other comms, are a welcome response to rising privacy concerns (though less welcome to some marketers).

The question of how far to take optimization and how to use employee or customer data ethically is one that every organization must address today. To avoid dehumanizing the workplace, it’s essential to set a clear vision of the future you want to create, then take steps to protect people, for example:

- Consider what data should be anonymized. For example, it may be best if you can see your personal productivity stats, while your manager only sees aggregated stats.

- Provide simple policies that make it clear to employees how AI and their data are being used.

- Test AI algorithms for bias and unintended consequences. For instance, if a programmer’s algorithm is only interested in productivity, their bias could lead to an older person losing their job because they work more slowly than a younger person.

- Enable everyone (including ex-employees) to request their data.
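To make the first suggestion a little more concrete, here is a minimal sketch in Python of how one set of productivity records could feed two different views: a personal one and an aggregated one for managers. Everything in it is an assumption for illustration: the field names (`employee_id`, `team`, `tasks_completed`), the function names and the minimum group size of five are hypothetical, not taken from any real product.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical threshold: below this head count, a team average
# could reveal too much about an individual, so it is withheld.
MIN_GROUP_SIZE = 5


def personal_view(records, employee_id):
    """Return the raw task counts for one employee only."""
    return [
        r["tasks_completed"]
        for r in records
        if r["employee_id"] == employee_id
    ]


def manager_view(records, min_group_size=MIN_GROUP_SIZE):
    """Return the average tasks completed per team.

    Teams with fewer distinct employees than min_group_size
    are reported as None instead of a number.
    """
    per_team = defaultdict(lambda: defaultdict(list))
    for r in records:
        per_team[r["team"]][r["employee_id"]].append(r["tasks_completed"])

    summary = {}
    for team, by_employee in per_team.items():
        if len(by_employee) < min_group_size:
            summary[team] = None  # too few people to aggregate safely
        else:
            values = [v for counts in by_employee.values() for v in counts]
            summary[team] = round(mean(values), 1)
    return summary
```

An employee would call `personal_view(records, their_id)` to see their own numbers, while a manager dashboard would only ever call `manager_view(records)`. Withholding small teams is one possible design choice; the right threshold depends on your organization and is worth deciding openly.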

Ultimately, creating a digital workplace demands that we make trade-offs between performance and privacy. These are not decisions for some time in the future; they are decisions we should be making today, and they will shape the future of work.